In virtual reality, vignetting is a handy tool to fight motion sickness. Games like Eagle Flight use it, and so does our beloved Lucid Trips!

Lucid Trips with vignetting

But the “stock” vignette that we copy from traditional games is not actually made for in-your-face screens. This might not be obvious at first, but once you see the raw vignette, it literally makes your eyes hurt.

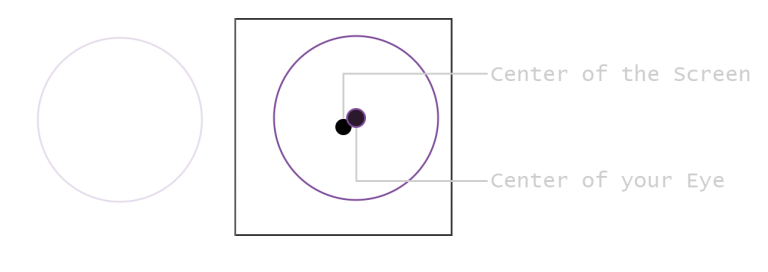

The reason is simple: the screen center, which is also the center of the vignette, is not the eye center. Where exactly the eye center sits differs from one head-mounted display to the next:

Screen-Center vs. Eye-Center

But one can figure out the actual eye center position:

- Place a point in front of the camera. From my tests, it should not be too close. 10 units seems to be alright.

- Then obtain the screen coordinate of this point for each eye and pass it to the shader.

This works for both Single- and Multi-Pass rendering (which was the original motivation to do this).

Let’s Code!

- Unity offers currentCamera.transform.forward to get our camera's looking direction. Now we need to multiply the direction by our desired distance and add the world-space position of our camera to it, done.

Vector3 vignetteWorldSpaceDirection = currentCamera.transform.forward; // camera looking direction in world space

Vector3 vignetteCenterWorldSpace = currentCamera.transform.position + vignetteWorldSpaceDirection * VIGNETTING_DEPTH; // translate vignetting direction to world-space position

- Our point is a world-space coordinate, but our vignette is calculated in viewport space… First, we need these helper functions:

Matrix4x4 GetWorldToClipMatrix(Camera.StereoscopicEye eye) {

return currentCamera.GetStereoProjectionMatrix(eye) * currentCamera.GetStereoViewMatrix(eye);

}

static Vector4 NormalizeScreenSpaceCords(Vector4 coords) {

//normalize viewport coords from [-1, 1] to [0, 1]

return (coords * 0.5f) + Vector4.one * 0.5f;

}

Now we calculate the viewport positions:

Matrix4x4 worldToClipSpace_left = GetWorldToClipMatrix(Camera.StereoscopicEye.Left);

viewportSpaceOffset[0] = worldToClipSpace_left.MultiplyPoint(vignetteCenterWorldSpace); // project the world-space position; MultiplyPoint also performs the perspective divide, so the result lies in [-1, 1]

viewportSpaceOffset[0] = -NormalizeScreenSpaceCords(viewportSpaceOffset[0]); // convert [-1, 1] to viewport space [0, 1], then negate so the shader can add it as an offset

// the same for the right eye...
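To see why a point straight ahead does not have to land on the screen center, here is a minimal Python sketch of the projection step, outside of Unity. It is not the code from the package: the function names `frustum` and `project_to_viewport` are made up for this illustration, the frustum numbers are invented, the camera is assumed to sit at the origin looking down the negative z axis (so the view matrix is the identity), and the off-center frustum is only an assumption about how an HMD eye might be set up.

```python
def frustum(left, right, bottom, top, near, far):
    """OpenGL-style perspective projection for a (possibly off-center) frustum."""
    return [
        [2 * near / (right - left), 0.0, (right + left) / (right - left), 0.0],
        [0.0, 2 * near / (top - bottom), (top + bottom) / (top - bottom), 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def project_to_viewport(proj, point_view):
    """View-space point -> clip space -> perspective divide -> viewport [0, 1]."""
    x, y, z = point_view
    v = (x, y, z, 1.0)
    # Matrix * vector gives the clip-space position.
    clip = [sum(row[i] * v[i] for i in range(4)) for row in proj]
    # Perspective divide maps clip space to [-1, 1].
    ndc_x, ndc_y = clip[0] / clip[3], clip[1] / clip[3]
    # Same remap as NormalizeScreenSpaceCords: [-1, 1] -> [0, 1].
    return (ndc_x * 0.5 + 0.5, ndc_y * 0.5 + 0.5)


# A point 10 units straight ahead of the camera (looking down -z).
point = (0.0, 0.0, -10.0)

# Symmetric frustum, like a regular monitor camera (hypothetical numbers).
symmetric = frustum(-1.0, 1.0, -1.0, 1.0, 1.0, 100.0)
# Off-center frustum, shifted sideways (hypothetical numbers).
off_center = frustum(-1.2, 0.8, -1.0, 1.0, 1.0, 100.0)

print(project_to_viewport(symmetric, point))   # lands on the screen center
print(project_to_viewport(off_center, point))  # x is shifted to roughly 0.6
```

With the symmetric frustum the point projects to the middle of the viewport, while the shifted frustum moves it sideways. That shifted position is what the vignette should be centered on.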

Let’s recall the pipeline which leads us to the viewport position: world-space ⇨ view-space ⇨ clip-space ⇨ viewport-space. I don’t want to get too deep into the topic here, but if you’re interested, I will link some resources down below.

- Now we are ready for the shader! Assuming our vertex shader just passes in the pure screen-space UV coordinates, we first set up our pixel shader.

float2 screenUV = UnityStereoScreenSpaceUVAdjust(i.uv, _MainTex_ST);

#if (UNITY_SINGLE_PASS_STEREO)

// in single pass, we need to figure out which eye we're rendering to by ourselves

unity_StereoEyeIndex = screenUV.x > 0.5; // right half of the screen is the right eye

#endif

half2 vignetteCenter = i.uv;

A vignette is basically a circle… but smooth… We can calculate it by taking the distance to our freshly calculated eye center.

vignetteCenter += _vignetteViewportSpaceOffset[unity_StereoEyeIndex].xy; // shift to center

vignetteCenter *= 2.0; // make vignette a bit tighter

half mask = dot(vignetteCenter, vignetteCenter); // compute squared distance from the eye center

Now we just need to combine it with the input…

half4 col = tex2D(_MainTex, screenUV);

return col * saturate(1.0 - mask); // compose: mask is 0 at the eye center and grows outward, so invert it to darken the edges
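The mask math can be checked on the CPU with a few lines of Python. This is only a sketch of the idea, not the shader from the package: `vignette_factor` is a hypothetical helper, the eye center of (0.6, 0.5) is an invented value, and the sketch assumes the squared distance is inverted and clamped so that the image stays bright at the eye center and darkens toward the edges.

```python
def vignette_factor(uv, eye_center, tightness=2.0):
    """Brightness factor in [0, 1] for a pixel at viewport position uv."""
    # Shift so the eye center becomes the origin, then tighten the vignette.
    dx = (uv[0] - eye_center[0]) * tightness
    dy = (uv[1] - eye_center[1]) * tightness
    # Squared distance from the eye center (the dot product in the shader).
    mask = dx * dx + dy * dy
    # Invert and clamp to [0, 1]: 1 at the eye center, 0 far away from it.
    return min(max(1.0 - mask, 0.0), 1.0)


eye_center = (0.6, 0.5)  # hypothetical per-eye value from the projection step

print(vignette_factor((0.6, 0.5), eye_center))  # brightest exactly at the eye center
print(vignette_factor((0.5, 0.5), eye_center))  # the screen center is already slightly darker
print(vignette_factor((0.1, 0.5), eye_center))  # far from the eye center: fully dark
```

The second value is the interesting one: with a shifted eye center, the middle of the screen is no longer the brightest spot.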

And that’s it. Look into the Unity package for the full implementation.

Some resources: